The latest version of StarCCM+ supports multi-node jobs in the IBM AIX environment through POE (Parallel Operating Environment). Previously, StarCCM+ could not run in parallel on more than one node, so a job on hydra[1] was limited to 16 cores, the size of a single node. Starting with StarCCM+ version 5.02, parallel processes can be spawned on remote compute nodes through POE on the IBM cluster. The question now is: how well does StarCCM+ scale?

Experimental environment

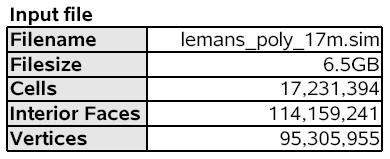

The test case for this experiment is lemans_poly_17m.sim, a simulation input file frequently used for benchmarking. The file is 6.5 GB and contains 17 million cells and 95 million vertices.

The primary purpose of the experiment is to determine how well StarCCM+ scales on hydra, but the relative performance of the two machines is also evaluated. Each compute node on eos[2] has two quad-core Intel Xeon X5560 processors (8 cores per node), and the nodes are connected via InfiniBand. Each compute node on hydra has eight dual-core Power5+ processors (16 cores per node), and IBM's HPS (High Performance Switch) is used as the interconnect.

Results

The benchmark results are evaluated from two perspectives: scalability and relative performance.

Scalability

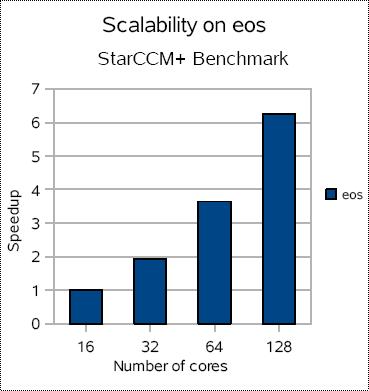

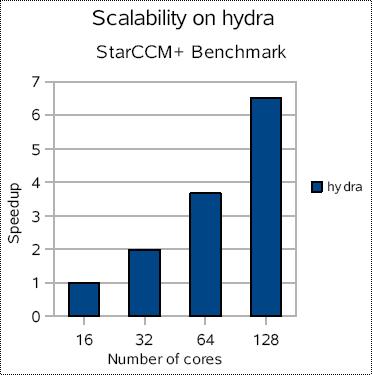

Scalability on eos and hydra is very similar. The run time on 16 cores is used as the baseline for computing speedup.

On eos, speedup roughly doubles each time the number of cores doubles, and scaling remains strong up to 64 cores. At 128 cores (8x the baseline), scaling flattens and the speedup is only 6.2x.
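The speedup figures are ratios of wall-clock times against the 16-core baseline. The following is a minimal sketch of that arithmetic, assuming speedup is defined as T(16) / T(p) and parallel efficiency as measured speedup divided by the ideal speedup p / 16. The helper names are illustrative only and are not part of StarCCM+.

```python
# Speedup and parallel efficiency relative to a 16-core baseline.
#   speedup(p)    = T(16) / T(p)        (T = wall-clock time)
#   ideal(p)      = p / 16              (perfect linear scaling)
#   efficiency(p) = speedup(p) / ideal(p)
# Assumption: these definitions match how the benchmark speedups were computed.

BASELINE_CORES = 16


def ideal_speedup(cores: int, baseline: int = BASELINE_CORES) -> float:
    """Speedup expected under perfect linear scaling from `baseline` to `cores`."""
    return cores / baseline


def speedup(t_baseline: float, t_cores: float) -> float:
    """Measured speedup from wall-clock times at the baseline and at p cores."""
    return t_baseline / t_cores


def parallel_efficiency(measured_speedup: float, cores: int,
                        baseline: int = BASELINE_CORES) -> float:
    """Fraction of the ideal (linear) speedup actually achieved."""
    return measured_speedup / ideal_speedup(cores, baseline)


if __name__ == "__main__":
    # eos at 128 cores: 6.2x measured speedup over 16 cores.
    eff = parallel_efficiency(6.2, 128)
    print(f"ideal speedup at 128 cores: {ideal_speedup(128):.1f}x")
    print(f"parallel efficiency at 128 cores: {eff:.1%}")
```

For eos at 128 cores, the ideal speedup is 128 / 16 = 8x, so the measured 6.2x corresponds to a parallel efficiency of 6.2 / 8 = 0.775, or about 77.5%.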

Hydra shows a very similar result: scaling remains strong up to 64 cores and begins to flatten around 128 cores.
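To give a rough sense of how much work each core receives as the job grows, this sketch divides the 17 million cells of lemans_poly_17m.sim evenly across the core counts. It assumes a perfectly balanced partition and core counts of 16, 32, 64, and 128 (doubling from the baseline). The actual StarCCM+ domain decomposition and the exact intermediate core counts are not stated here, and `cells_per_core` is a hypothetical helper.

```python
# Approximate cells per core when the 17-million-cell mesh is split evenly.
# Assumption: perfectly balanced partitions; real StarCCM+ partitions may be uneven.

TOTAL_CELLS = 17_000_000  # from the test-case description


def cells_per_core(total_cells: int, cores: int) -> int:
    """Average number of cells assigned to each core (integer division)."""
    if cores <= 0:
        raise ValueError("cores must be positive")
    return total_cells // cores


if __name__ == "__main__":
    # Assumed core counts: doubling from the 16-core baseline up to 128 cores.
    for cores in (16, 32, 64, 128):
        print(f"{cores:4d} cores: ~{cells_per_core(TOTAL_CELLS, cores):,} cells per core")
```

Going from 16 to 128 cores shrinks the average share from about 1.06 million cells to about 133,000 cells per core.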

Based on these results, there is no significant difference in the scalability of StarCCM+ between eos and hydra. However, this could change with different input files, I/O patterns, or characteristics of the data in the input file.

Performance

Here, 'performance' simply means which machine finishes the simulation faster: given the same input file, how long does each machine take? The gap between eos and hydra narrows slightly as the number of cores increases. eos is about 2.51x faster than hydra on 16 cores and 2.41x faster on 128 cores.
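The relative-performance ratios also connect back to the scalability results. If ratio(p) = T_hydra(p) / T_eos(p), then hydra's speedup from 16 to p cores is S_hydra(p) = (ratio(16) / ratio(p)) × S_eos(p). The sketch below applies this identity to the reported figures. It assumes the ratios and the eos speedup come from the same wall-clock measurements. Because the inputs are rounded, the result is only an approximate inference, not a measured value, and the function names are illustrative.

```python
# Relative performance and an inferred speedup for the slower machine.
#   ratio(p)     = T_hydra(p) / T_eos(p)       (> 1 means eos is faster)
#   S_hydra(p)   = T_hydra(16) / T_hydra(p)
#                = (ratio(16) / ratio(p)) * S_eos(p)
# Assumption: ratios and speedups are derived from the same run times.


def relative_performance(t_hydra: float, t_eos: float) -> float:
    """How many times faster eos is than hydra at the same core count."""
    return t_hydra / t_eos


def implied_speedup(other_speedup: float, ratio_baseline: float,
                    ratio_p: float) -> float:
    """Infer hydra's speedup from eos's speedup and the two time ratios."""
    return other_speedup * ratio_baseline / ratio_p


if __name__ == "__main__":
    # Reported: eos is 2.51x faster at 16 cores, 2.41x faster at 128 cores,
    # and eos reaches a 6.2x speedup at 128 cores.
    s_hydra = implied_speedup(6.2, 2.51, 2.41)
    print(f"implied hydra speedup at 128 cores: {s_hydra:.2f}x")
```

With the reported figures this gives 6.2 × 2.51 / 2.41 ≈ 6.46x for hydra at 128 cores, slightly above eos's 6.2x. This is consistent with the narrowing gap between the machines and with the observation that their scalability is similar.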

Conclusion

The benchmark results show that eos runs StarCCM+ faster than hydra for the given input file. However, hardware differences between the two machines, such as L3 cache size, interconnect, and the number of cores per node, may produce different results for input files with different characteristics.

References

1. http://sc.tamu.edu/help/hydra/

2. http://sc.tamu.edu/systems/eos/hardware.php